Relevance Topic Model for Unstructured Social Group Activity Recognition

[Figure: Unstructured social group activities]

People

Fang Zhao

Yongzhen Huang

Liang Wang

Tieniu Tan

Overview

Unstructured social group activity recognition in web videos is challenging because of (1) the semantic gap between class labels and low-level visual features and (2) the lack of labeled training data. To tackle this problem, we propose a "relevance topic model" that jointly learns meaningful mid-level representations built on bag-of-words (BoW) video representations and a classifier with sparse weights. In our approach, sparse Bayesian learning is incorporated into an undirected topic model (Replicated Softmax) to discover topics that are relevant to video classes and suitable for prediction. Rectified linear units are used to increase the expressive power of the topics, so that they better explain videos with complex content, and to make variational inference tractable for the proposed model. An efficient variational EM algorithm is presented for model parameter estimation and inference. Experimental results on the Unstructured Social Activity Attribute dataset show that our model achieves state-of-the-art performance and outperforms other supervised topic models in classification accuracy, particularly when only a very small number of labeled training videos is available.
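To make two of the ingredients named above more concrete, here is a minimal NumPy sketch; it is not the authors' implementation. `relu_topic_means` and `word_probs` follow the standard Replicated Softmax conditionals (hidden biases scaled by the document length D, a softmax over the vocabulary for each word), with the sigmoid hidden unit swapped for a deterministic max(0, x). That swap is an assumption: the paper's exact rectified linear parameterization may differ. `ard_regression` illustrates sparse Bayesian learning through automatic relevance determination (ARD) with MacKay-style fixed-point updates. It substitutes a Gaussian regression likelihood for the paper's classifier so the updates stay in closed form, and it treats the features as fixed instead of learning topics and weights jointly with variational EM. All function names are hypothetical.

```python
import numpy as np


def relu_topic_means(counts, W, a):
    """Mean topic activations for a batch of BoW count vectors.

    Replicated Softmax scales each hidden bias by the document length D.
    Assumption: the sigmoid is replaced by a deterministic max(0, x).
    counts: (N, K) word counts, W: (J, K) topic-word weights, a: (J,) biases.
    """
    D = counts.sum(axis=1, keepdims=True)        # document lengths, (N, 1)
    pre = counts @ W.T + D * a                   # (N, J) pre-activations
    return np.maximum(pre, 0.0)                  # rectified linear topics


def word_probs(h, W, b):
    """p(word = k | h) per document: softmax over the K vocabulary entries."""
    logits = h @ W + b                           # (N, K)
    logits -= logits.max(axis=1, keepdims=True)  # subtract max for stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def ard_regression(Phi, y, n_iter=200, alpha_max=1e10):
    """Sparse Bayesian (ARD) linear regression with fixed-point updates.

    Each weight w_i has its own Gaussian prior precision alpha_i; weights
    of irrelevant features are driven toward very large alpha_i (pruned).
    Assumption: Gaussian likelihood with noise precision beta.
    """
    N, M = Phi.shape
    alpha = np.ones(M)
    beta = 1.0 / max(np.var(y), 1e-12)
    for _ in range(n_iter):
        # Posterior over weights: N(mu, Sigma).
        Sigma = np.linalg.inv(beta * Phi.T @ Phi + np.diag(alpha))
        mu = beta * Sigma @ Phi.T @ y
        # gamma_i in [0, 1]: how well the data determine w_i.
        gamma = np.clip(1.0 - alpha * np.diag(Sigma), 1e-12, 1.0)
        alpha = np.clip(gamma / np.maximum(mu ** 2, 1e-30), 1e-6, alpha_max)
        resid = y - Phi @ mu
        beta = max(N - gamma.sum(), 1e-6) / max(resid @ resid, 1e-12)
    return mu, alpha, beta
```

A feature whose precision alpha_i grows very large has its weight shrunk toward zero. This is the sense in which an ARD prior keeps only the "relevant" features, which in the relevance topic model are the topics.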

Paper

Relevance Topic Model for Unstructured Social Group Activity Recognition. Fang Zhao, Yongzhen Huang, Liang Wang, Tieniu Tan. Neural Information Processing Systems (NIPS 2013).

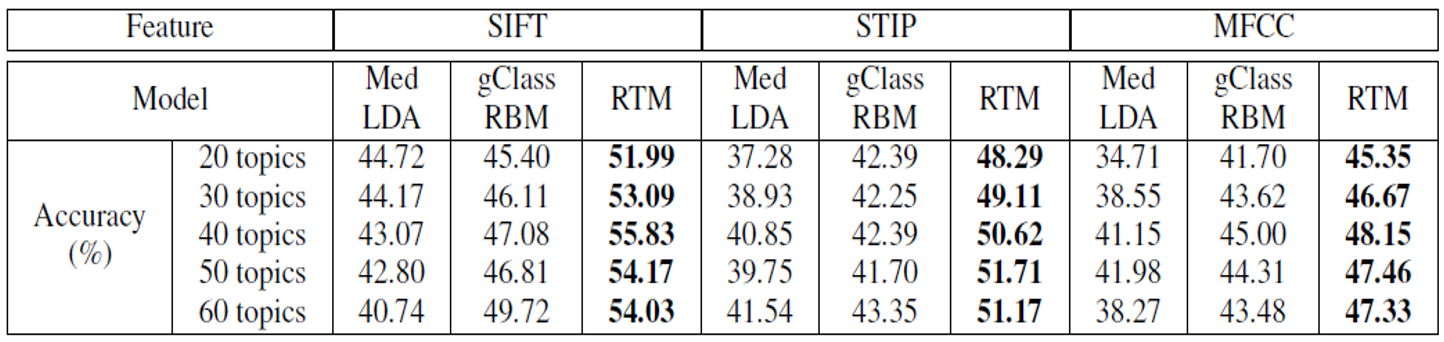

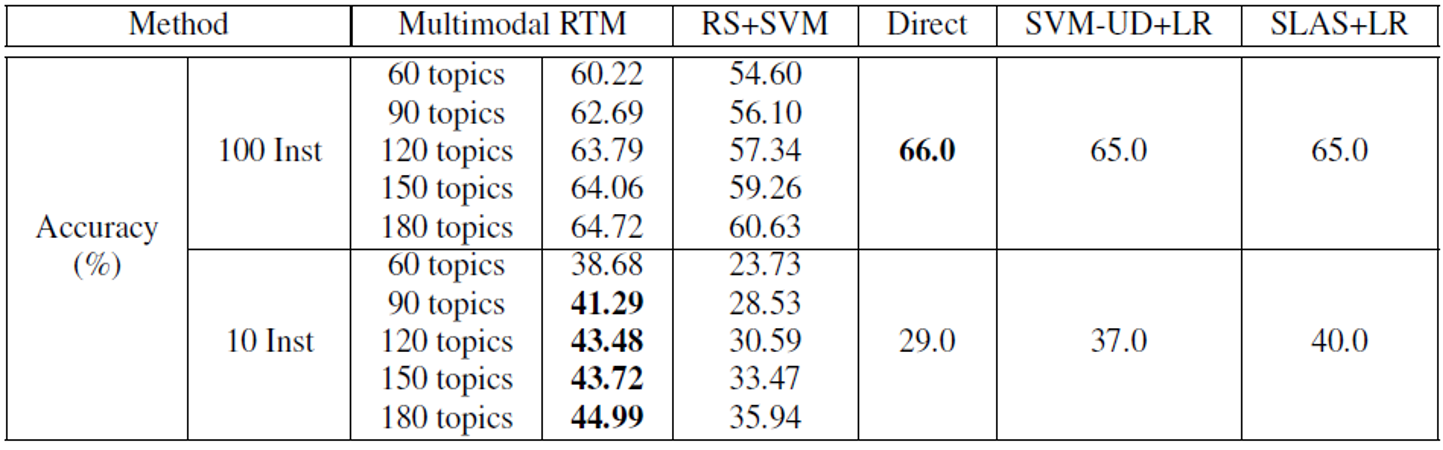

Classification accuracy

[Figure: Classification accuracy of different supervised topic models for single-modal features]

[Figure: Classification accuracy of different methods for multimodal features]

Acknowledgments

This work was supported by the National Basic Research Program of China (2012CB316300), the Hundred Talents Program of CAS, the National Natural Science Foundation of China (61175003, 61135002, 61203252), and the Tsinghua National Laboratory for Information Science and Technology Cross-discipline Foundation.